float

Floating-point numbers

- http://0.30000000000000004.com/

- https://github.com/erikwiffin/0.30000000000000004

- http://blog.jobbole.com/95343/

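The article discusses the floating-point arithmetic problem in computers and shows the output of 0.1 + 0.2 in a variety of languages.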

浮点数运算

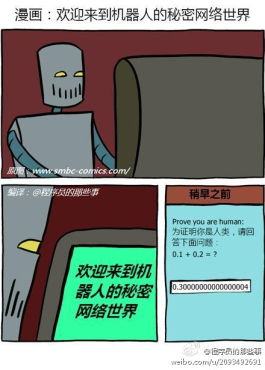

你使用的语言并不烂,它能够做浮点数运算。计算机天生只能存储整数,因此它需要某种方法来表示小数。这种表示方式会带来某种程度的误差。这就是为什么往往 0.1 + 0.2 不等于 0.3。

为什么会这样?

实际上很简单。对于十进制数值系统(就是我们现实中使用的),它只能表示以进制数的质因子为分母的分数。10 的质因子有 2 和 5。因此 1/2、1/4、1/5、1/8和 1/10 都可以精确表示,因为这些分母只使用了10的质因子。相反,1/3、1/6 和 1/7 都是循环小数,因为它们的分母使用了质因子 3 或者 7。二进制下(进制数为2),只有一个质因子,即2。因此你只能精确表示分母质因子是2的分数。二进制中,1/2、1/4 和 1/8 都可以被精确表示。但是,1/5 或者 1/10 就变成了循环小数。所以,在十进制中能够精确表示的 0.1 与 0.2(1/10 与 1/5),到了计算机所使用的二进制数值系统中,就变成了循环小数。当你对这些循环小数进行数学运算时,并将二进制数据转换成人类可读的十进制数据时,会对小数尾部进行截断处理。

Floating Point Math

Your language isn't broken, it's doing floating point math. Computers can only natively store integers, so they need some way of representing decimal numbers. This representation comes with some degree of inaccuracy. That's why, more often than not, .1 + .2 != .3.

Why does this happen?

It's actually pretty simple. When you have a base 10 system (like ours), it can only express fractions that use a prime factor of the base. The prime factors of 10 are 2 and 5. So 1/2, 1/4, 1/5, 1/8, and 1/10 can all be expressed cleanly because the denominators all use prime factors of 10. In contrast, 1/3, 1/6, and 1/7 are all repeating decimals because their denominators use a prime factor of 3 or 7. In binary (or base 2), the only prime factor is 2. So you can only express fractions cleanly which only contain 2 as a prime factor. In binary, 1/2, 1/4, 1/8 would all be expressed cleanly as decimals. While, 1/5 or 1/10 would be repeating decimals. So 0.1 and 0.2 (1/10 and 1/5) while clean decimals in a base 10 system, are repeating decimals in the base 2 system the computer is operating in. When you do math on these repeating decimals, you end up with leftovers which carry over when you convert the computer's base 2 (binary) number into a more human readable base 10 number.
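The prime-factor rule above can be checked mechanically. The following is a minimal Python sketch, not part of the original article: `terminates_in_base` is a hypothetical helper name introduced here for illustration, and the second half assumes the usual case where Python's `float` is an IEEE 754 double.

```python
from decimal import Decimal
from fractions import Fraction
from math import gcd


def terminates_in_base(denominator: int, base: int) -> bool:
    """Return True if 1/denominator has a finite expansion in `base`.

    Hypothetical helper for illustration: it strips from the denominator
    every prime factor it shares with the base. The expansion is finite
    exactly when nothing but 1 is left over.
    """
    d = denominator
    g = gcd(d, base)
    while g > 1:
        while d % g == 0:
            d //= g
        g = gcd(d, base)
    return d == 1


if __name__ == "__main__":
    # The fractions named in the text, checked in base 10 and base 2.
    for n in (2, 4, 5, 8, 10, 3, 6, 7):
        print(f"1/{n}: base 10 -> {terminates_in_base(n, 10)}, "
              f"base 2 -> {terminates_in_base(n, 2)}")

    # The value actually stored for 0.1 is the nearest binary fraction,
    # so its denominator is a power of two rather than 10.
    print(Fraction(0.1))
    # The same stored value written out exactly in base 10.
    print(Decimal(0.1))
    # The rounding leftovers make the comparison fail.
    print(0.1 + 0.2 == 0.3)
```

Running it should show that 1/5 and 1/10 terminate in base 10 but not in base 2, which is the reason 0.1 and 0.2 cannot be stored exactly.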

Below are some examples of sending .1 + .2 to standard output in a variety of languages.

| Language | Code | Result |
|---|---|---|
| ABAP | `WRITE / CONV f( '.1' + '.2' ).` and `WRITE / CONV decfloat16( '.1' + '.2' ).` | `0.30000000000000004` and `0.3` |
| Ada | `with Ada.Text_IO; use Ada.Text_IO; procedure Sum is A : Float := 0.1; B : Float := 0.2; C : Float := A + B; begin Put_Line(Float'Image(C)); Put_Line(Float'Image(0.1 + 0.2)); end Sum;` | `3.00000E-01` `3.00000E-01` |
| APL | `0.1 + 0.2` | `0.30000000000000004` |
| AutoHotkey | `MsgBox, % 0.1 + 0.2` | `0.300000` |
| awk | `echo \| awk '{ print 0.1 + 0.2 }'` | `0.3` |
| bc | `0.1 + 0.2` | `0.3` |
| C | `#include<stdio.h> int main(int argc, char** argv) { printf("%.17f\n", .1+.2); return 0; }` | `0.30000000000000004` |
| Clojure | `(+ 0.1 0.2)` | `0.30000000000000004` |
| Clojure supports arbitrary precision and ratios. `(+ 0.1M 0.2M)` returns `0.3M`, while `(+ 1/10 2/10)` returns `3/10`. | | |
| ColdFusion | `<cfset foo = .1 + .2> <cfoutput>#foo#</cfoutput>` | `0.3` |
| Common Lisp | `* (+ .1 .2)`, `* (+ 1/10 2/10)`, and `* (- 1.2 1.0)` | `0.3`, `3/10`, and `0.20000005` |
| C++ | `#include <iomanip> std::cout << std::setprecision(17) << 0.1 + 0.2` | `0.30000000000000004` |
| Crystal | `puts 0.1 + 0.2` and `puts 0.1_f32 + 0.2_f32` | `0.30000000000000004` and `0.3` |
| C# | `Console.WriteLine("{0:R}", .1 + .2);` and `Console.WriteLine("{0:R}", .1m + .2m);` | `0.30000000000000004` and `0.3` |
| C# has support for 128-bit decimal numbers, with 28-29 significant digits of precision. Their range, however, is smaller than that of both the single and double precision floating point types. Decimal literals are denoted with the `m` suffix. | | |
| D | `import std.stdio; void main(string[] args) { writefln("%.17f", .1+.2); writefln("%.17f", .1f+.2f); writefln("%.17f", .1L+.2L); }` | `0.29999999999999999` `0.30000001192092896` `0.30000000000000000` |
| Dart | `print(.1 + .2);` | `0.30000000000000004` |
| dc | `0.1 0.2 + p` | `.3` |
| Delphi XE5 | `writeln(0.1 + 0.2);` | `3.00000000000000E-0001` |
| Elixir | `IO.puts(0.1 + 0.2)` | `0.30000000000000004` |
| Elm | `0.1 + 0.2` | `0.30000000000000004` |
| elvish | `+ .1 .2` | `0.30000000000000004` |
| elvish uses Go's double-precision type (`float64`) for numerical operations. | | |
| Emacs Lisp | `(+ .1 .2)` | `0.30000000000000004` |
| Erlang | `io:format("~w~n", [0.1 + 0.2]).` | `0.30000000000000004` |
| FORTRAN | `program FLOATMATHTEST real(kind=4) :: x4, y4 real(kind=8) :: x8, y8 real(kind=16) :: x16, y16 ! REAL literals are single precision, use _8 or _16 ! if the literal should be wider. x4 = .1; x8 = .1_8; x16 = .1_16 y4 = .2; y8 = .2_8; y16 = .2_16 write (*,*) x4 + y4, x8 + y8, x16 + y16 end` | `0.300000012 0.30000000000000004 0.300000000000000000000000000000000039` |
| Gforth | `0.1e 0.2e f+ f.` | `0.3` |
| GHC (Haskell) | `0.1 + 0.2 :: Double` and `0.1 + 0.2 :: Float` | `0.30000000000000004` and `0.3` |
| Haskell supports rational numbers. To get the math right, `0.1 + 0.2 :: Rational` returns `3 % 10`, which is exactly 0.3. | | |
| Go | `package main import "fmt" func main() { fmt.Println(.1 + .2) var a float64 = .1 var b float64 = .2 fmt.Println(a + b) fmt.Printf("%.54f\n", .1 + .2) }` | `0.3` `0.30000000000000004` `0.299999999999999988897769753748434595763683319091796875` |
| Go numeric constants have arbitrary precision. | | |
| Groovy | `println 0.1 + 0.2` | `0.3` |
| Literal decimal values in Groovy are instances of `java.math.BigDecimal`. | | |
| Hugs (Haskell) | `0.1 + 0.2` | `0.3` |
| Io | `(0.1 + 0.2) print` | `0.3` |
| Java | `System.out.println(.1 + .2);` and `System.out.println(.1F + .2F);` | `0.30000000000000004` and `0.3` |
| Java has built-in support for arbitrary precision numbers using the `BigDecimal` class. | | |
| JavaScript | `console.log(.1 + .2);` | `0.30000000000000004` |
| The decimal.js library provides an arbitrary-precision `Decimal` type for JavaScript. | | |
| Julia | `.1 + .2` | `0.30000000000000004` |
| Julia has built-in rational numbers support and also a built-in arbitrary-precision `BigFloat` data type. To get the math right, `1//10 + 2//10` returns `3//10`. | | |
| K (Kona) | `0.1 + 0.2` | `0.3` |
| Lua | `print(.1 + .2)` and `print(string.format("%0.17f", 0.1 + 0.2))` | `0.3` and `0.30000000000000004` |
| Mathematica | `0.1 + 0.2` | `0.3` |
| Mathematica has a fairly thorough internal mechanism for dealing with numerical precision and supports arbitrary precision. | | |
| Matlab | `0.1 + 0.2` and `sprintf('%.17f',0.1+0.2)` | `0.3` and `0.30000000000000004` |
| MySQL | `SELECT .1 + .2;` | `0.3` |
| Nim | `echo(0.1 + 0.2)` | `0.3` |
| Objective-C | `#import <Foundation/Foundation.h> int main(int argc, const char * argv[]) { @autoreleasepool { NSLog(@"%.17f\n", .1+.2); } return 0; }` | `0.30000000000000004` |
| OCaml | `0.1 +. 0.2;;` | `float = 0.300000000000000044` |
| Perl 5 | `perl -E 'say 0.1+0.2'` and `perl -e 'printf q{%.17f}, 0.1+0.2'` | `0.3` and `0.30000000000000004` |
| Perl 6 | `perl6 -e 'say 0.1+0.2'`, `perl6 -e 'say (0.1+0.2).base(10, 17)'`, `perl6 -e 'say 1/10+2/10'`, and `perl6 -e 'say (0.1.Num + 0.2.Num).base(10, 17)'` | `0.3`, `0.3`, `0.3`, and `0.30000000000000004` |
| Perl 6, unlike Perl 5, uses rationals by default, so `.1` is stored something like `{ numerator => 1, denominator => 10 }`. To actually trigger the behavior, you must force the numbers to be of type `Num` (double in C terms) and use the `base` function instead of the `sprintf` or `fmt` functions (since those functions have a bug that limits the precision of the output). | | |
| PHP | `echo .1 + .2; var_dump(.1 + .2);` | `0.3` `float(0.30000000000000004441)` |
| PHP `echo` converts 0.30000000000000004441 to a string and shortens it to "0.3". To achieve the desired floating point result, adjust the precision ini setting: `ini_set("precision", 17)`. | | |
| PicoLisp | `[load "frac.min.l"] # https://gist.github.com/6016d743c4c124a1c04fc12accf7ef17` and `[println (+ (/ 1 10) (/ 2 10))]` | `(/ 3 10)` |
| You must load the file `frac.min.l`. | | |
| Postgres | `SELECT 0.1::float + 0.2::float;` | `0.3` |
| Powershell | `PS C:\>0.1 + 0.2` | `0.3` |
| Prolog (SWI-Prolog) | `?- X is 0.1 + 0.2.` | `X = 0.30000000000000004.` |
| Pyret | `0.1 + 0.2` and `~0.1 + ~0.2` | `0.3` and `~0.30000000000000004` |
| Pyret has built-in support for both rational numbers and floating points. Numbers written normally are assumed to be exact. In contrast, RoughNums are represented by floating points, and are written with a `~` in front, to indicate that they are not precise answers. (The `~` is meant to visually evoke hand-waving.) Therefore, a user who sees a computation produce `~0.30000000000000004` knows to treat the value with skepticism. RoughNums also cannot be compared directly for equality; they can only be compared up to a given tolerance. | | |
| Python 2 | `print(.1 + .2)`, `float(decimal.Decimal(".1") + decimal.Decimal(".2"))`, and `.1 + .2` | `0.3`, `0.3`, and `0.30000000000000004` |
| Python 2's `print` statement converts 0.30000000000000004 to a string and shortens it to "0.3". To achieve the desired floating point result, use `print(repr(.1 + .2))`. This was fixed in Python 3 (see below). | | |
| Python 3 | `print(.1 + .2)` and `.1 + .2` | `0.30000000000000004` and `0.30000000000000004` |
| Python (both 2 and 3) supports decimal arithmetic with the `decimal` module, and true rational numbers with the `fractions` module. | | |
| R | `print(.1+.2)` and `print(.1+.2, digits=18)` | `0.3` and `0.30000000000000004` |
| Racket (PLT Scheme) | `(+ .1 .2)` and `(+ 1/10 2/10)` | `0.30000000000000004` and `3/10` |
| Ruby | `puts 0.1 + 0.2` and `puts 1/10r + 2/10r` | `0.30000000000000004` and `3/10` |
| Ruby supports rational number literals directly in version 2.1 and newer. For older versions use `Rational`. Ruby also has a library specifically for decimals: `BigDecimal`. | | |
| Rust | `extern crate num; use num::rational::Ratio; fn main() { println!("{}", 0.1 + 0.2); println!("1/10 + 2/10 = {}", Ratio::new(1, 10) + Ratio::new(2, 10)); }` | `0.30000000000000004` `1/10 + 2/10 = 3/10` |
| Rust has rational number support from the `num` crate. | | |
| SageMath | `.1 + .2`, `RDF(.1) + RDF(.2)`, `RBF('.1') + RBF('.2')`, and `QQ('1/10') + QQ('2/10')` | `0.3`, `0.30000000000000004`, `["0.300000000000000 +/- 1.64e-16"]`, and `3/10` |
| SageMath supports various fields for arithmetic: Arbitrary Precision Real Numbers, RealDoubleField, Ball Arithmetic, Rational Numbers, etc. | | |
| scala | `scala -e 'println(0.1 + 0.2)'`, `scala -e 'println(0.1F + 0.2F)'`, and `scala -e 'println(BigDecimal("0.1") + BigDecimal("0.2"))'` | `0.30000000000000004`, `0.3`, and `0.3` |
| Smalltalk | `0.1 + 0.2.` | `0.30000000000000004` |
| Swift | `0.1 + 0.2` and `NSString(format: "%.17f", 0.1 + 0.2)` | `0.3` and `0.30000000000000004` |
| TCL | `puts [expr .1 + .2]` | `0.30000000000000004` |
| Turbo Pascal 7.0 | `writeln(0.1 + 0.2);` | `3.0000000000E-01` |
| Vala | `static int main(string[] args) { stdout.printf("%.17f\n", 0.1 + 0.2); return 0; }` | `0.30000000000000004` |
| Visual Basic 6 | `a# = 0.1 + 0.2: b# = 0.3 Debug.Print Format(a - b, "0." & String(16, "0")) Debug.Print a = b` | `0.0000000000000001` `False` |
| Appending the identifier type character `#` to any identifier forces it to Double. | | |
| zsh | `echo "$((.1+.2))"` | `0.30000000000000004` |
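Several notes in the table point to the usual ways around the problem: comparing up to a tolerance (as Pyret requires for RoughNums), decimal arithmetic, and rational numbers. The sketch below shows how these might look in Python 3 using only the standard library. The variable names are illustrative, and the default tolerance of `math.isclose` is an assumption that may not suit every application.

```python
import math
from decimal import Decimal
from fractions import Fraction

total = 0.1 + 0.2

# Exact equality fails because the operands were rounded when stored.
print(total == 0.3)              # False

# Comparing up to a tolerance; math.isclose defaults to rel_tol=1e-09.
print(math.isclose(total, 0.3))  # True

# Decimal arithmetic: build the values from strings so that they are
# never rounded to binary floating point first.
decimal_sum = Decimal("0.1") + Decimal("0.2")
print(decimal_sum)               # 0.3

# Rational arithmetic keeps an exact numerator and denominator.
fraction_sum = Fraction(1, 10) + Fraction(2, 10)
print(fraction_sum)              # 3/10
```

Which option fits depends on the task: a tolerance is usually enough for measurements, while decimal or rational types are the safer choice when exact results matter, for example with money.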
